KDE Optimization – Unbiased Cross-Validation

A quick recap: In the KDE Optimization Primer, we defined the mean integrated squared error (MISE) to express the accuracy of the KDE.

Now, let’s expand the quadratic term:

Next, re-arrange the terms:

Where:

- $\hat{f}(x;h)$ is the KDE estimator of the probability density function at $x$.

- $f(x)$ is the probability density function of the underlying population distribution.

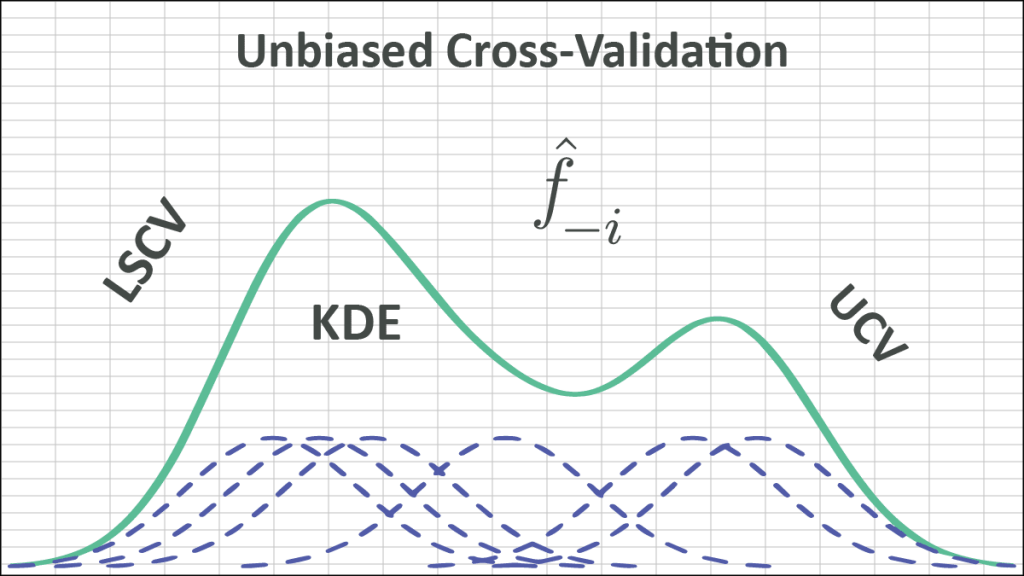

In this article, we go one step further and tackle the unbiased cross-validation optimization method for KDE.

This quantity is unknown, but it can be estimated unbiasedly as follows:

- First, let’s estimate the second term (i.e., $\mathbb{E}\left[ \int{{\hat{f}}}(x;h)f(x)dx \right]$):

$$\mathbb{E}\left[ \int{{\hat{f}}}(x;h)f(x)dx \right]=\frac{1}{n}\sum\limits_{i=1}^{n}{{{{\hat{f}}}_{-i}}({{X}_{i}};h)}$$

Where:

- ${{\hat{f}}_{-i}}({{X}_{i}};h)$ is the leave-one-out (i.e., ith datapoint) KDE. $${{\hat{f}}_{-i}}(X;h)=\frac{1}{n-1}\sum\limits_{j=1,j\ne i}^{n}{{{K}_{h}}}(X-{{X}_{j}})=\frac{1}{(n-1)h}\sum\limits_{j=1,j\ne i}^{n}{K}\left( \frac{X-{{X}_{j}}}{h} \right)$$

So,

$$\mathbb{E}\left[ \int{{\hat{f}}}(x;h)f(x)dx \right]=\frac{1}{n(n-1)h}\sum\limits_{i=1}^{n}{\sum\limits_{j=1,i\ne j}^{n}{K\left( \frac{{{X}_{i}}-{{X}_{j}}}{h} \right)}}$$Since the kernel function is symmetric (i.e., $K(-x)=K(x)$), we can simplify the term further:

$$\mathbb{E}\left[ \int{{\hat{f}}}(x;h)f(x)dx \right]=\frac{2}{n(n-1)h}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{K\left( \frac{{{X}_{i}}-{{X}_{j}}}{h} \right)}}$$ - Next, let’s estimate the first term (i.e., $\mathbb{E}\left[ \int{{\hat{f}}}{{(x;h)}^{2}}dx \right]$):

$$\hat{f}(x;h)=\frac{1}{nh}\sum\limits_{i=1}^{n}{K((x-{{X}_{i}})/h)}$$

$$\hat{f}{{(x;h)}^{2}}=\frac{1}{{{(nh)}^{2}}}\left( \sum\limits_{i=1}^{n}{{{K}^{2}}((x-{{X}_{i}})/h)+2\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{K((x-{{X}_{i}})/h)\times K((x-{{X}_{j}})/h)}}} \right)$$

Now, bring the integral in:

$$\int{\hat{f}{{(x;h)}^{2}}dx}=\frac{1}{{{(nh)}^{2}}}\left( \sum\limits_{i=1}^{n}{\int{{{K}^{2}}((x-{{X}_{i}})/h)dx}+2\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{\int{K((x-{{X}_{i}})/h)\times K((x-{{X}_{j}})/h)dx}}}} \right)$$But, let $z=(x-{{X}_{i}})/h$:

$$\int{{{K}^{2}}((x-{{X}_{i}})/h)dx}=h\int{{{K}^{2}}(z)dz}=h\times R(K)$$ $$\int{K((x-{{X}_{i}})/h)\times K((x-{{X}_{j}})/h)dx}=h\int{K(z)\times K(z+({{X}_{i}}-{{X}_{j}})/h)dz}$$And $K(.)$is the symmetric function:

$$\int{K((x-{{X}_{i}})/h)\times K((x-{{X}_{j}})/h)dx}=h\int{K(z)\times K(({{X}_{j}}-{{X}_{i}})/h-z)dz=h\times {{K}^{\otimes }}(}({{X}_{j}}-{{X}_{i}})/h)$$Where ${{K}^{\otimes }}(.)$is the kernel auto-convolution function:

$$\int{\hat{f}{{(x;h)}^{2}}dx}=\frac{R(K)}{nh}+\frac{2}{{{n}^{2}}h}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{{{K}^{\otimes }}(({{X}_{j}}-{{X}_{i}})/h)}}$$Putting terms together in the MISE formula, we get:

$$\text{LSCV}(h)=\mathbb{E}\left[ \int{{\hat{f}}}{{(x;h)}^{2}}dx \right]-2\mathbb{E}\left[ \int{{\hat{f}}}(x;h)f(x)dx \right]$$ $$\text{LSCV}(h)=\frac{1}{nh}\left[ R(K)+\frac{2}{n}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{{{K}^{\otimes }}(({{X}_{j}}-{{X}_{i}})/h)}}-\frac{4}{(n-1)}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{K(({{X}_{i}}-{{X}_{j}})/h)}} \right]$$Finally, we search (numerically) for an optimal bandwidth that minimizes the LSCV function above.

To expediate the convergence of the optimization solution, numerical methods often require the first and the second derivatives of the utility function:

$$\begin{align} & \frac{\partial }{\partial h}\text{LSCV}(h)=-\frac{\text{LSCV}(h)}{h}- \\ & \frac{1}{n{{h}^{3}}}\left[ \frac{2}{n}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{({{X}_{j}}-{{X}_{i}}){{K}^{\otimes (1)}}(({{X}_{j}}-{{X}_{i}})/h)}}-\frac{4}{(n-1)}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{({{X}_{j}}-{{X}_{i}}){{K}^{(1)}}(({{X}_{i}}-{{X}_{j}})/h)}} \right] \\ \end{align} $$And the second derivative function, as follows:

\begin{align} & \frac{{{\partial }^{2}}}{\partial {{h}^{2}}}\text{LSCV}(h)=-\frac{1}{h}\times \frac{\partial }{\partial h}\text{LSCV(h)+}\frac{\text{LSCV(h)}}{{{h}^{2}}}+ \\ & \frac{3}{n{{h}^{4}}}\left[ \frac{2}{n}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{({{X}_{j}}-{{X}_{i}}){{K}^{\otimes (1)}}(({{X}_{j}}-{{X}_{i}})/h)}}-\frac{4}{(n-1)}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{({{X}_{j}}-{{X}_{i}}){{K}^{(1)}}(({{X}_{i}}-{{X}_{j}})/h)}} \right]+ \\ & \frac{1}{n{{h}^{5}}}\left[ \frac{2}{n}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{{{({{X}_{j}}-{{X}_{i}})}^{2}}{{K}^{\otimes (2)}}(({{X}_{j}}-{{X}_{i}})/h)}}-\frac{4}{(n-1)}\sum\limits_{i=1}^{n-1}{\sum\limits_{j=i+1}^{n}{{{({{X}_{j}}-{{X}_{i}})}^{2}}{{K}^{(2)}}(({{X}_{i}}-{{X}_{j}})/h)}} \right] \\ \end{align}

Numerical optimization of the LSCV function can be challenging. In practice, several local minima are possible, and the roughness of the objective function can vary notably depending on $n$ and $f$. Consequently, optimization routines may get trapped in spurious solutions.

The presence of multiple minima presents a challenge to numerical optimizers, but it can fairly be fixed by selecting the largest value of h for which local minimum occurs.

Discussion

Dissimilar to the Silverman rule-of-thumb and Sheather & Jones Direct Plug-in methods, the UCV does not make any assumption about the true distribution function (e.g., that it is intuitive and asymptotically optimal under very weak conditions).

Nevertheless, the relative rate of convergence of LSCV(h) to h or $\hat{h}$ is in the order tenth root of sample size $(O\left(n^{-1/10}\right))$, which is extremely slow, but the best rate for $\hat{h}$.

Furthermore, the LSCV suffers a lot under sample variations (i.e., different samples from the same distribution of estimated bandwidth values have a big variance.).

Finally, the LSCV often has several minima (spurious ones), but it can be easily remedied by selecting the largest value. On a few occasions, the local minima may not be present at all.

References

- Silverman, B.W. (1986). Density Estimation for Statistics and Data Analysis. Chapman & Hall/CRC London.

- W. Zucchini, Applied smoothing techniques, Part 1 Kernel Density Estimation., 2003.

- Byeong U. Park and J. S. Marron. Comparison of Data-Driven Bandwidth Selectors. Journal of the American Statistical Association Vol. 85, No. 409 (Mar., 1990), pp. 66-72 (7 pages).

- S.J. Sheather and M.C. Jones. A reliable data-based bandwidth selection method for kernel density estimation. J. Royal Statist. Soc. B, 53:683-690, 1991.

- Bowman, A., An alternative method of cross-validation for the smoothing of density estimates, Biometrika, 71 (1984) 353-360.

- Rudemo, M., Empirical choice of histograms and kernel density estimators, Scandinavian Journal of Statistics, 9 (1982) P 65-78.